Turn your laptop into an AI PC — how to run an AI chatbot locally on your PC

Your AI chatbot may be misusing your chats — run it locally on your PC instead

Generative AI chatbots and AI PCs are dominating conversation in the laptop world this year. For most of us, a generative AI chatbot is yet another browser tab. All we need to access services like ChatGPT is an internet-connected device; we don’t have to worry about hosting their enormous computing power or footing their sizable electricity bills. But these perks come at a cost, such as trusting companies with our sensitive data. Have you considered running an AI chatbot locally on your computer instead?

You don't need one of the new Copilot+ PCs to make this happen. Whether you use a MacBook, a Windows laptop, or even a Linux laptop, you can join in the AI PC fun and, best of all, do it without sharing your data with OpenAI, Microsoft, Google, or any other big AI company.

I'm going to walk you through why you might want to run an offline AI chatbot on your laptop, then how to get one installed today, and finally what accessible AI on your laptop could mean in the future.

Why should you run an offline AI chatbot?

When you interact with a chatbot on a website, the company behind it controls the experience and, in turn, your conversations. More often than not, companies like OpenAI train their AI models on your data, which should give you pause before you share personal details. For example, if you want to use an AI to budget your finances, it’s best not to share any sensitive documents with a web-based AI.

In one instance, the chat histories of several ChatGPT users were compromised and appeared inside other, unrelated accounts. Though you can disable your chat history, asking AI companies not to use your information to train their models is nearly impossible.

Plus, an online chatbot suffers from the typical challenges of any internet service. Over the past year alone, popular platforms such as ChatGPT and Microsoft Copilot have gone down for extended periods, leaving people who rely on them stranded. Because of these websites’ reach, their creators have to impose extensive restrictions on the questions the chatbots can answer to ensure they’re not misused. Therefore, if you want to research a sensitive topic for educational purposes, you may be out of luck.

Then there’s the pricing. Several online chatbots’ high-end features are locked behind a hefty paywall. Their free versions, in comparison, not only limit how much you can use them but also exclude access to premium tools, including document analysis and image generation.

How to run an AI chatbot locally on your PC, Mac, or Linux computer

To run an AI chatbot on your Windows, macOS, or Linux computer, all you need is a free app called Jan. It allows you to install an open-source model like Meta’s Llama 3 on your machine and interact with it from a familiar chat window. What I especially like about Jan’s interface is that it’s approachable and breaks down the various technical steps in the process for non-technical users.

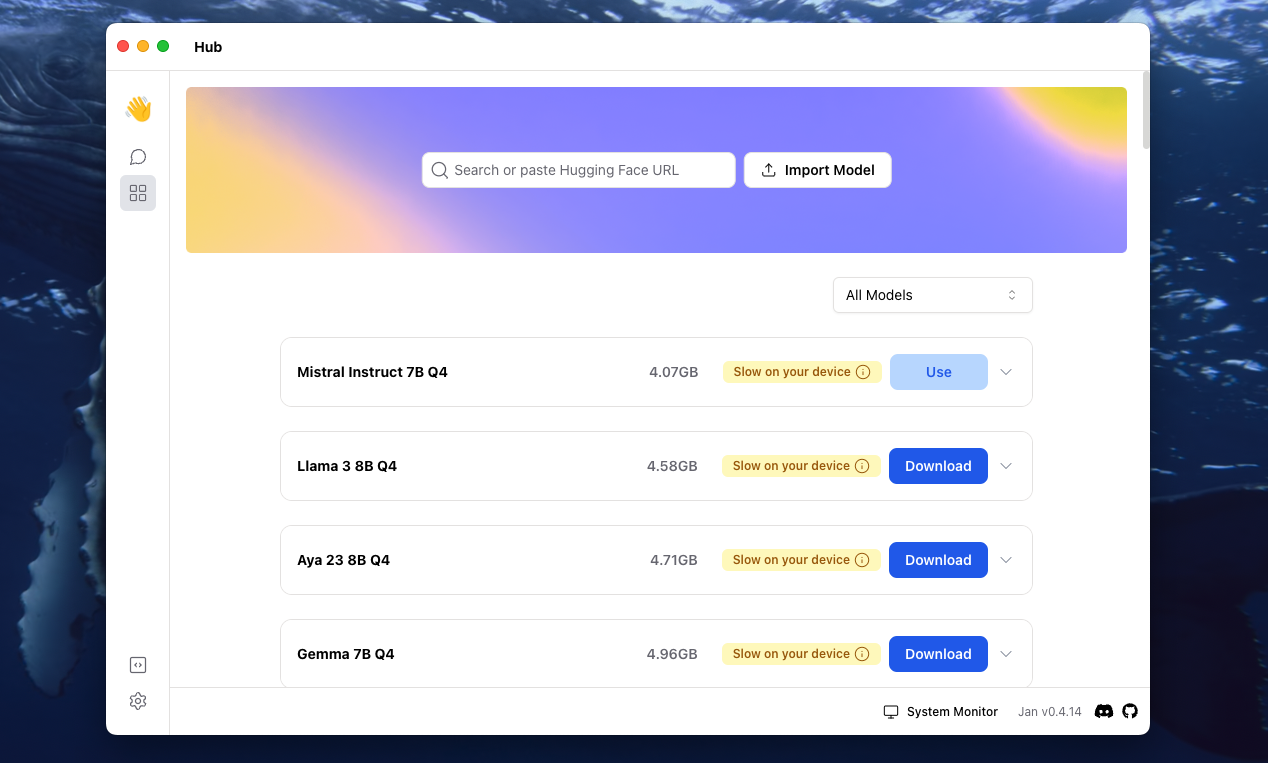

For example, one of the first steps during setup is selecting one of the available AI models for download. Jan will tell you which ones will run slowly on your PC’s configuration, what each does well (such as general-purpose tasks or document analysis), and more. You also have the option to import models that aren't currently listed in Jan’s catalog.

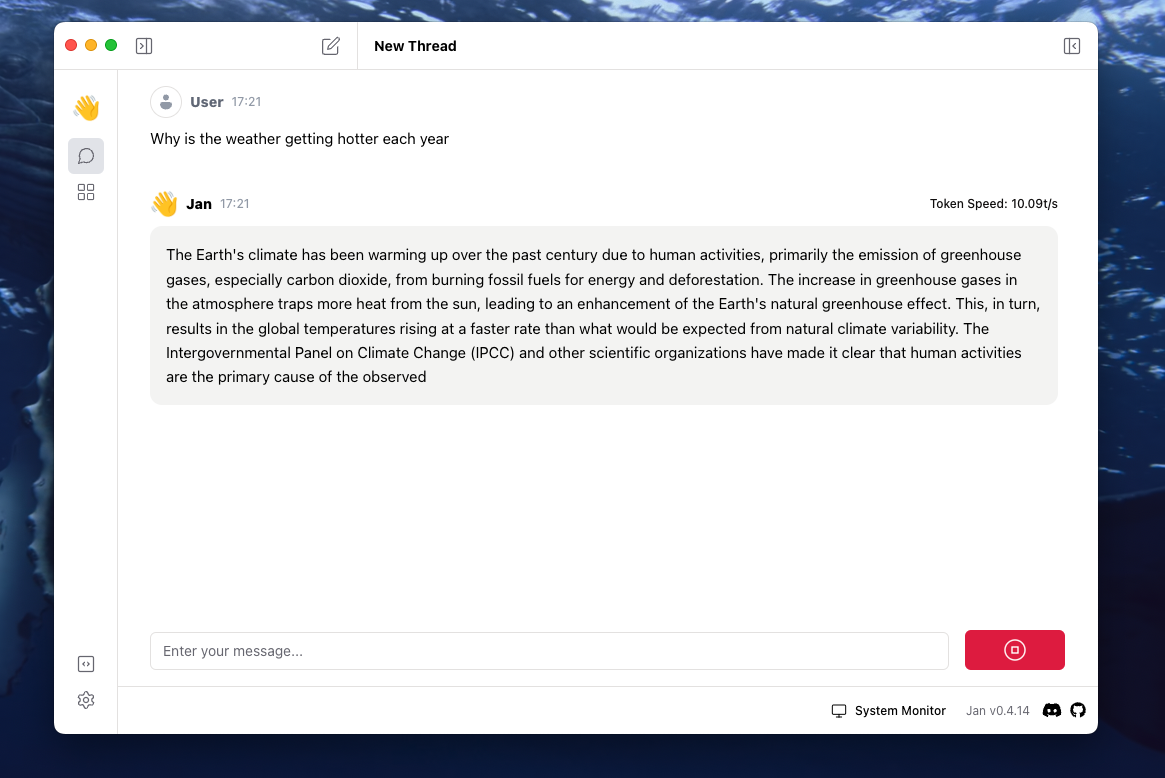

Another highlight of Jan is that it lets you customize the parameters of each of your chat threads. Before you kick off a conversation, you can specify instructions for how the chatbot should behave and how much of your computer’s resources it should use.
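Per-thread settings like these resemble the request format that many local model runners accept. The following is a minimal sketch, assuming an OpenAI-style chat payload. The function name, the model id, and the field values are hypothetical and only illustrate the idea of behavior instructions plus a limit on response length. Jan's own settings panel may name or store these options differently.

```python
import json


def build_chat_request(model, instructions, user_message,
                       max_tokens=512, temperature=0.7):
    """Assemble an OpenAI-style chat payload as a plain dict (illustrative only)."""
    return {
        "model": model,
        "messages": [
            # The system message carries the behavior instructions for the thread.
            {"role": "system", "content": instructions},
            {"role": "user", "content": user_message},
        ],
        # A lower max_tokens caps how long (and how costly) each reply can be.
        "max_tokens": max_tokens,
        "temperature": temperature,
    }


request = build_chat_request(
    model="llama3-8b-instruct",  # hypothetical model id, not taken from Jan
    instructions="You are a concise budgeting assistant.",
    user_message="How should I split a monthly budget?",
    max_tokens=256,
)
print(json.dumps(request, indent=2))
```

Keeping the instructions in a separate system message means you can reuse the same behavior across many questions in one thread.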

Once you’ve downloaded Jan from its website, fire up the app and, from the left sidebar, head into the “Hub” section. Select your preferred AI model based on the recommendations and Jan will download it if necessary. Each model is at least a few gigabytes, so keep that in mind if you are on a slow or metered connection. After that, select the “Use” button and it will create a new chat window. You can fine-tune the thread to your preferences from the “Threads Settings” sidebar.
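To get a feel for what a multi-gigabyte download means on a slow connection, here is a rough back-of-the-envelope sketch. The 4 GB file size and the connection speeds are assumed example values, not figures from Jan, and real downloads will vary with network conditions.

```python
def download_minutes(size_gb, speed_mbps):
    """Minutes needed to download size_gb gigabytes at speed_mbps megabits per second."""
    megabits = size_gb * 8 * 1000  # 1 GB = 8,000 megabits in decimal units
    return megabits / speed_mbps / 60


# Assumed example: a 4 GB model file on three different connections.
for speed in (10, 50, 200):
    print(f"{speed:>3} Mbps: {download_minutes(4.0, speed):.1f} min")
```

Under these assumptions, a 4 GB model takes a little under an hour at 10 Mbps and a few minutes at 200 Mbps.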

On an entry-level computer with 8GB of RAM like my M1 Mac Mini, it’s best to close all your programs before you activate a model in Jan, as it will take at least 70-80% of your computer’s memory. On several occasions when I asked multi-layered questions, it even brought my Mac to a halt and took a couple of solid minutes to respond. Therefore, if you plan to actively use a local chatbot alongside other software, you’ll want a higher-end PC, ideally with a dedicated GPU.
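A rough way to see why memory fills up so quickly is to estimate a model's footprint from its parameter count and the number of bits stored per weight. This is a simplified sketch: the 1.2 overhead factor for context and runtime buffers is an assumption, and actual usage depends on the app, the model file, and the conversation length.

```python
def model_memory_gb(params_billions, bits_per_weight, overhead=1.2):
    """Rough RAM estimate in GB: weight storage times an assumed overhead factor."""
    weight_bytes = params_billions * 1e9 * bits_per_weight / 8
    return weight_bytes * overhead / 1e9


def ram_share(params_billions, bits_per_weight, total_ram_gb):
    """Fraction of total RAM the model would occupy under the estimate above."""
    return model_memory_gb(params_billions, bits_per_weight) / total_ram_gb


# Assumed example: an 8-billion-parameter model at two precisions on an 8 GB machine.
for bits in (4, 16):
    needed = model_memory_gb(8, bits)
    print(f"{bits}-bit: ~{needed:.1f} GB, {ram_share(8, bits, 8):.0%} of 8 GB")
```

With these assumptions, a 4-bit version of an 8-billion-parameter model needs roughly 4.8 GB, about 60% of an 8GB machine before the operating system and other apps are counted, while a 16-bit version would not fit at all.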

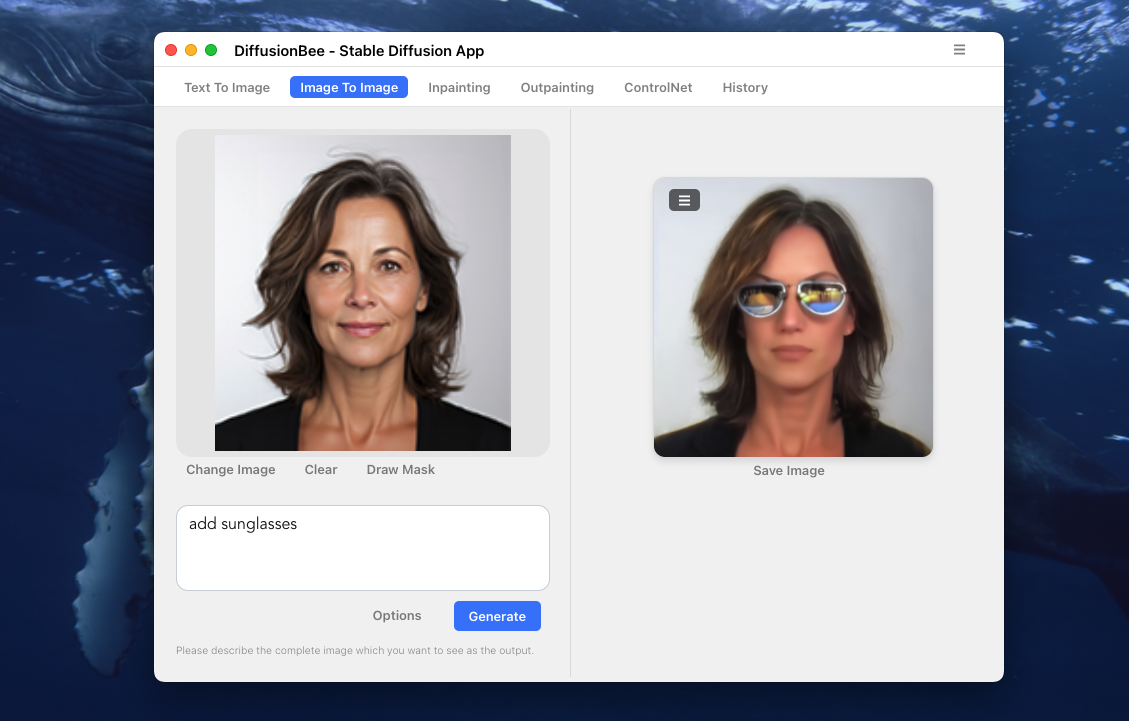

Jan is best suited for text-based interactions. However, as long as your computer has the resources (at least 16GB of RAM), you can also analyze documents and generate images locally. For the former, you can try GPT4All, and for the latter, DiffusionBee.

What do local chatbots mean for you and the future of AI?

Setting up an AI chatbot to work offline on your computer doesn’t take long. Many new desktop programs make the process as simple as downloading an app. Once you have it up and running, you can use it like you would any online service, except that in this case the information and files you share never leave your device.

The upsides don’t end there. Because these desktop programs configure open-source AI models (available for free from companies like Meta, Apple, and many more) right on your PC or Mac’s local storage, there’s no cap on how many questions you can ask them. You can also personalize them to fit your needs and set them up to always answer and act from a specific point of view.

More importantly, since local AI apps are backed by the open-source community, you have a variety of models to choose from. Those looking to pick an AI’s brain for math queries can choose one optimized for that task, while those who want to dissect long documents can go for another fine-tuned for that purpose.

At the same time, there’s a reason why AI services don’t usually run offline. The remote servers they are typically stored on are powered by advanced GPUs and computers that can process machine-learning requests in seconds. Most of our laptops are less capable of handling AI tasks and won’t be as snappy as ChatGPT in responding.

It’s no surprise, then, that in the last year, tech giants, from Apple to Microsoft, have been busy building smaller and more efficient AI models (and processors) that can comfortably run on all kinds of computers and leverage the endless benefits of each person having their own, local chatbot. This will also make it possible for AI to anticipate your needs on your phones and computers, whether that’s by offering more context about what’s on your screen or letting you review a history of all your activities on your PC.

While there are certainly tremendous advantages to web-based AI chatbots, for those who value privacy or simply don't want to pay for these services, installing a local AI chatbot could be precisely the AI PC experience you've been waiting for.

Shubham Agarwal is a freelance technology journalist from Ahmedabad, India. His work has previously appeared in Business Insider, Fast Company, HuffPost, and more. You can reach out to him on Twitter.