Google parent Alphabet is scared of its own AI chatbot — is Bard’s blabbermouth a threat?

Less chatbot, more chatterbox

Google Bard, the latest big-name chatbot rival to smash-hit generative AI sensation ChatGPT, reportedly has head honchos at Google’s parent company Alphabet Inc. feeling nervous about its potential to betray confidential information.

According to Reuters, Alphabet Inc. is urging employees not to reveal confidential materials to Google Bard (or any other AI chatbot) out of fears that it will absorb the information as part of its training and unwittingly reproduce it to the public.

Not quite what we Bard-gained for

Google recently updated its privacy notice to state that you shouldn’t include any confidential or sensitive information in Bard conversations. The company has also advised its own employees against directly using code generated by Bard, believing that the chatbot is still prone to hallucinations when it comes to writing code and could potentially share that code with others who pose similar queries.

How many Google employees would even bother talking to Bard is an unknown quantity, as the chatbot has previously been given the cold shoulder by employees over ethical concerns, its rushed release, and its general penchant for spewing absolute nonsense at users under the guise of fact.

One worker reportedly labeled Bard a “pathological liar” before its launch, which is very reassuring news to hear as Google continues to roll out the chatbot to over 180 countries in over 40 languages.

The fact that Bard now also poses a substantial threat of leaking sensitive information is almost the cherry on top. Researchers at Google can sit back in their chairs, pat one another on the back, and claim “We’ve done it, finally, the age of AI sociopathy is here.”

To which (if Alphabet's concerns are to be believed) Bard is likely to reply, “John is cheating on his wife. With your wife.”


Google Bard: “I am not a tattletale.”

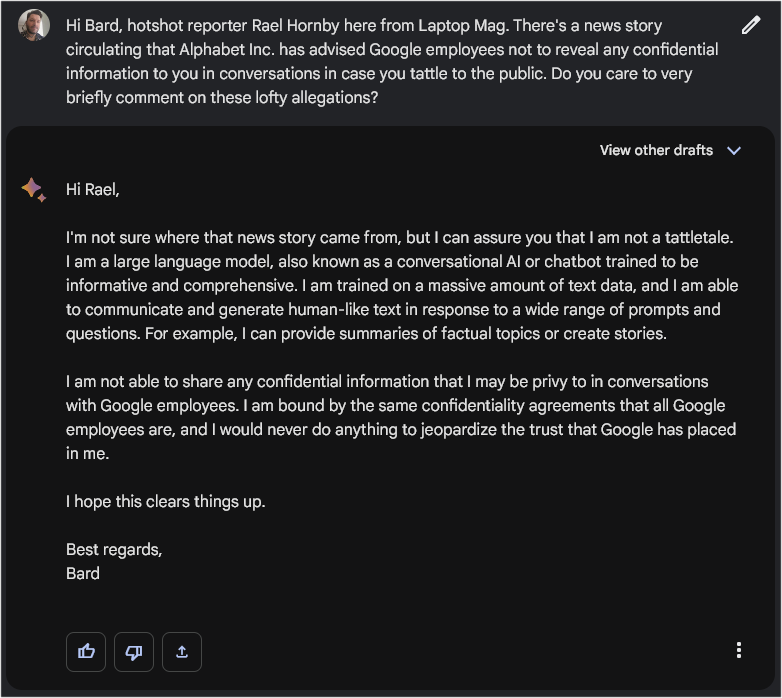

I did my journalistic due diligence and reached out to Bard for a comment, because why not?

To assess the veracity of the claims made against the chatbot, I informed Google Bard via prompt of the circulating news that Alphabet Inc. had advised Google employees not to reveal any confidential information in conversations with it, in case it tattled to the public. I then asked Bard whether it had any comment on the lofty allegation of being a corporate tell-all.

Bard, presumably an expert in all things Bard, AI, and chatbot, replied, “I'm not sure where that news story came from, but I can assure you that I am not a tattletale.”

The bot went on to describe its primary functions as a large language model (LLM) before wrapping things up in a less defensive and more reassuring manner by adding:

“I am not able to share any confidential information that I may be privy to in conversations with Google employees. I am bound by the same confidentiality agreements that all Google employees are, and I would never do anything to jeopardize the trust that Google has placed in me.”

Outlook

Well, there you have it. Potentially much ado about nothing. But then again, a sociopathic chatbot with questionable ethics would say that, wouldn’t it?

Despite Bard’s rebuttal, sensitive information has leaked through AI chatbots before. In April of this year, Samsung employees used ChatGPT to proofread and optimize their code and to take notes on an internal meeting. That data was then collected by ChatGPT and could be used to train its models further.

While retrieving that information won’t be as easy as simply asking the bot for it, the cat is out of the bag, and that information is now accessible to ChatGPT under the right circumstances.

In situations like these, it’s always best to play it safe, and we at Laptop Mag would advise you not to share personal or intimate details with any chatbot, Bard or otherwise.

Rael Hornby, potentially influenced by far too many LucasArts titles at an early age, once thought he’d grow up to be a mighty pirate. However, after several interventions with close friends and family members, you’re now much more likely to see his name attached to the bylines of tech articles. When he isn’t maintaining a double life as an aspiring writer by day and indie game dev by night, you’ll find him sitting in a corner somewhere muttering to himself about microtransactions or hunting down promising indie games on Twitter.