Google makes a bold pitch for an all-encompassing AI: "Project Astra"

At Google I/O, its annual new technology summit, Google again brought out Project Astra, an AI that becomes the center of your universe.

During Tuesday's Google I/O conference at Shoreline Amphitheatre in Mountain View, California, company leaders showcased a series of new products, including AI Mode for Google Search and Android XR.

The AI assistant Project Astra, which first appeared at last year's I/O, is by far the most fascinating or the scariest of these, depending on your views on AI. MIT Technology Review has written that Project Astra "could be generative AI’s killer app."

Set to compete directly with Apple Intelligence, the celestial-named Astra represents a constellation of different products and platforms, including Google Gemini AI, Android XR, and what the company is calling Action Intelligence.

These tools are designed to provide a seamless experience between you and the information you want on the web.

What is Project Astra, and how does it work?

During the two-hour presentation, in another entry to the growing category of “my hands are full and I need to use the internet right now” promotional materials, we were introduced to a character working on a bike.

The scenario — watch it in full — is that he needs to know how to replace a part, but his hands are covered in chain grease! (All of these spots so far can’t seem to escape the As Seen on TV black-and-white “does this ever happen to you” vibe.)

Given this common, everyday predicament, he asks Gemini to search the web for a user manual instead of picking up his phone.

After finding the manual, Gemini offers to read through the PDF file and find the information our amateur bike mechanic was on the hunt for. After that, he asks Gemini to call a local bike shop for him and order a part for pickup. Finally, after the mechanic runs into a stripped screw, he asks Gemini to find and open a YouTube video that tells him how to fix it.

In all of these scenarios, Gemini and Action Intelligence showcase complex use cases that have baffled most large action models (or LAMs, not to be confused with LLMs), including Apple Intelligence.

These actions include reading and interfacing with web pages, automated calling, and content retrieval.

How is Action Intelligence different from Apple Intelligence or Microsoft Copilot?

If Copilot is your copilot as you fly through your life, it seems Project Astra wants to be the sun in your personal solar system.

Though Apple beat Google to the punch with the launch of Apple Intelligence in October of last year, many users have been disappointed with the feature so far.

While the commercials make it seem like a supercharged Siri, in practice, many people haven’t found it useful for much more than summarizing emails and getting queries to ChatGPT a little bit faster.

Microsoft Copilot and Copilot+, the AI services that can be your generative AI assistant on Windows laptops or on your phone, integrate into Microsoft 365 applications and remember how you use them. Still, Google's Project Astra seemed several levels beyond that, and more deeply integrated into everything you do online.

It's difficult to see the presentation as anything other than a pitch for the biggest AI transformation a company has ever offered consumers.

Meanwhile, Google's Action Intelligence appears to finally deliver on a promise various companies have been making for years: a fully powered AI assistant. Those companies include startups like Rabbit, maker of the Rabbit R1 (designed with Teenage Engineering), which never came close to releasing a functional product, and giants like Apple, which seems to be making incremental, rather than revolutionary, gains toward the goal.

If Google’s Action Intelligence works as reported, it could represent a sea change in this market. An AI that can recognize web pages, use apps, and control every aspect of your phone with just a prompt could be a game-changer.

Astra + Android XR = A data hoarder’s dream

Also announced during Tuesday's keynote was Google’s upcoming integration with a prototype pair of mixed reality glasses, the XREAL Ones, using its new Android XR platform. It's called Project Aura.

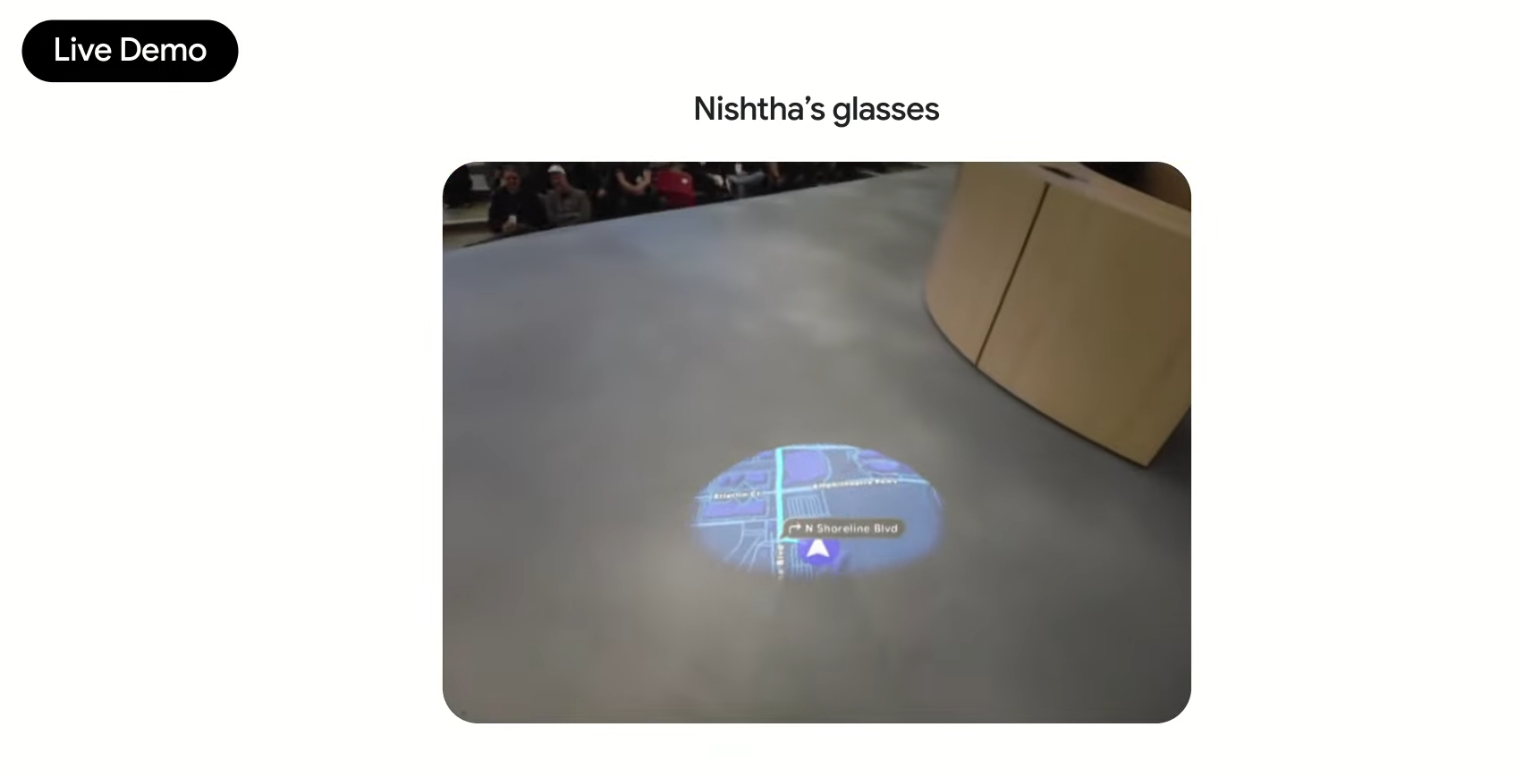

Before you get flashbacks, just know this ain’t your grandma’s Google Glass. Google showed off the capabilities of its new glasses in a live — and therefore not without the expected jank — tech demo, which included issuing a series of voice commands while Gemini and Action Intelligence handled the heavy lifting.

Perhaps in response to Meta’s recent reveal of its prototype Project Orion mixed-reality glasses, Google was not to be outdone.

While Mark Zuckerberg showed off a series of interesting use cases and games for Meta's glasses during a keynote last year, the Android XR demo was the first time we saw full-fledged mixed reality become, well, reality.

This included visual confirmation of sent texts, asking Gemini to recognize bands from some pictures on the wall, and controlling a music app. The glasses also displayed full heads-up maps and directions in real time.

The full integration of Android XR signaled Google’s intent: much like a smartwatch, the glasses are meant to keep you on your phone less and present in the world more.

But don’t mistake this utopian XR dream for altruism. As with Meta’s play into the VR market and its push of Ray-Ban Meta Wayfarer sunglasses with integrated cameras and microphones, what is sure to be a costly piece of hardware will likely be subsidized by collecting more user data.

With a camera and a pair of microphones on your head all day long, Google may finally be freed from the confines of your pocket or purse. All the time your camera used to spend in the dark, not gathering data, will now be spent examining every aspect of your daily life as Google’s backend figures out how to use the gathered information to sell products back to you.

When does Project Astra launch?

Google hasn’t confirmed a launch date for Astra or indicated which phones in the Android ecosystem will support the feature. For now, a loose date of sometime in 2025 has been established, and the company says it will roll Astra out in waves to select Android users over time.

As for the XREAL smart glasses and Android XR’s integration with Astra, the “Prototype Only” label stuck to the bottom of today’s stream should tell you all you need to know there. Neither the glasses nor the Android XR platform has a solid release date.

Given the many glitches we saw during the tech demo, Google likely still has a way to go before it is confident in establishing a release schedule.

Ultimately, what Google presented today is the company’s vision for an all-encompassing AI future. One where any part of the web, whether in a PDF or a YouTube video, is accessible with voice requests alone. Where your interaction with the online world is a conversation had out loud, you never pull out your phone again, and your thumbs can finally take a well-earned rest.

But whether Astra works as Google promises out of the box, or we’re just in for another series of Apple Intelligence-level shortfalls, remains to be seen.

Chris Stobing grew up in the heart of Silicon Valley and has been involved with technology since the 1990s. Previously at PCMag, he was a hardware analyst benchmarking and reviewing consumer gadgets and PC hardware such as desktop processors, GPUs, monitors, and internal storage.

He's also worked as a freelancer for Gadget Review, VPN.com, and Digital Trends, wading through seas of hardware and software at every turn. In his free time, you’ll find Chris shredding the slopes on his snowboard in the Rocky Mountains where he lives, or using his culinary-degree skills to whip up a dish in the kitchen for friends.