Intel's newest chiplet design could revolutionize AI computing — again

It's just a prototype for now, though

Intel has a new chiplet design that could revolutionize AI computing in the near future.

Intel's Integrated Photonics Solutions group showcased the new design in a presentation at the Optical Fiber Communication Conference (OFC) 2024.

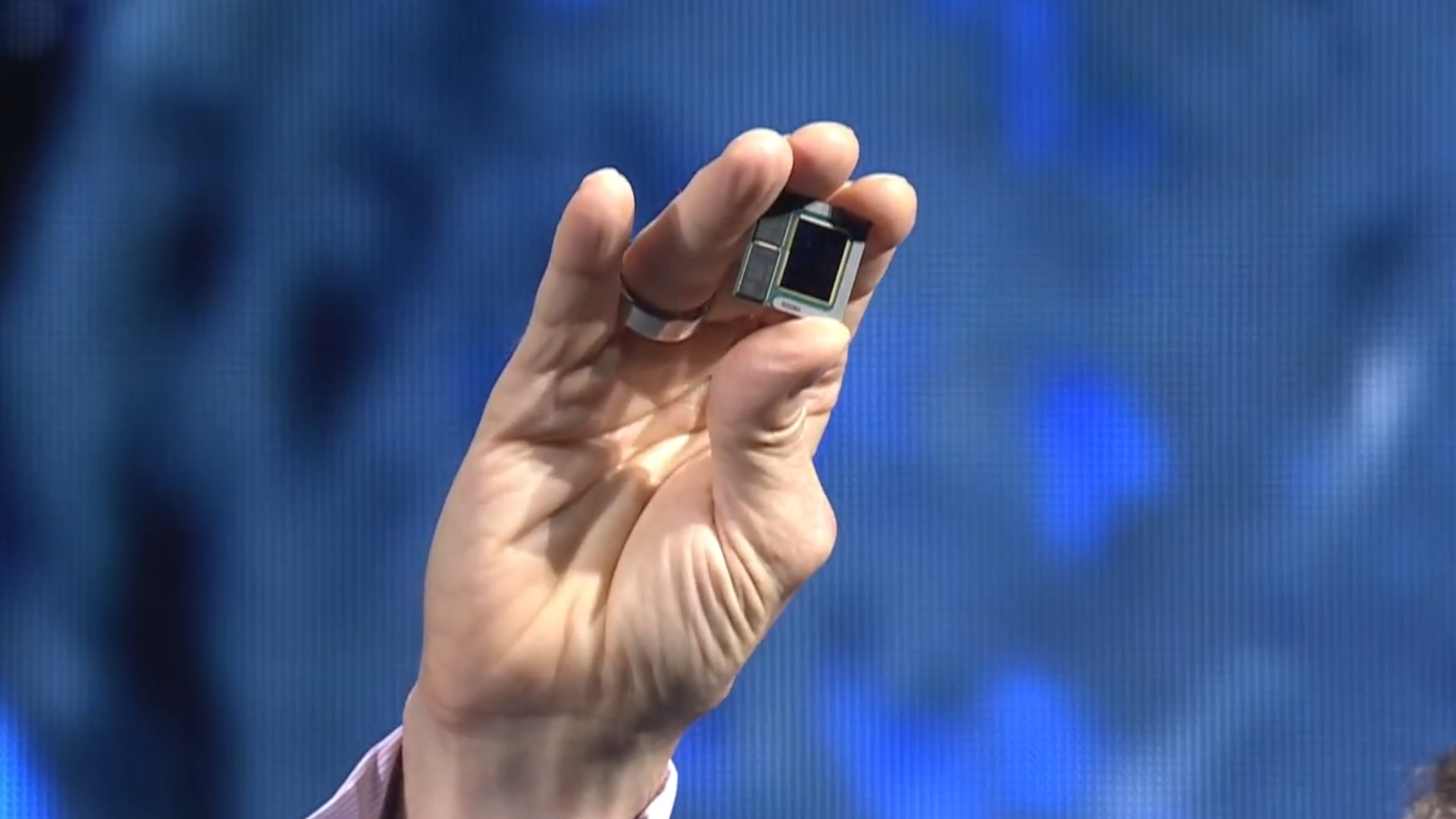

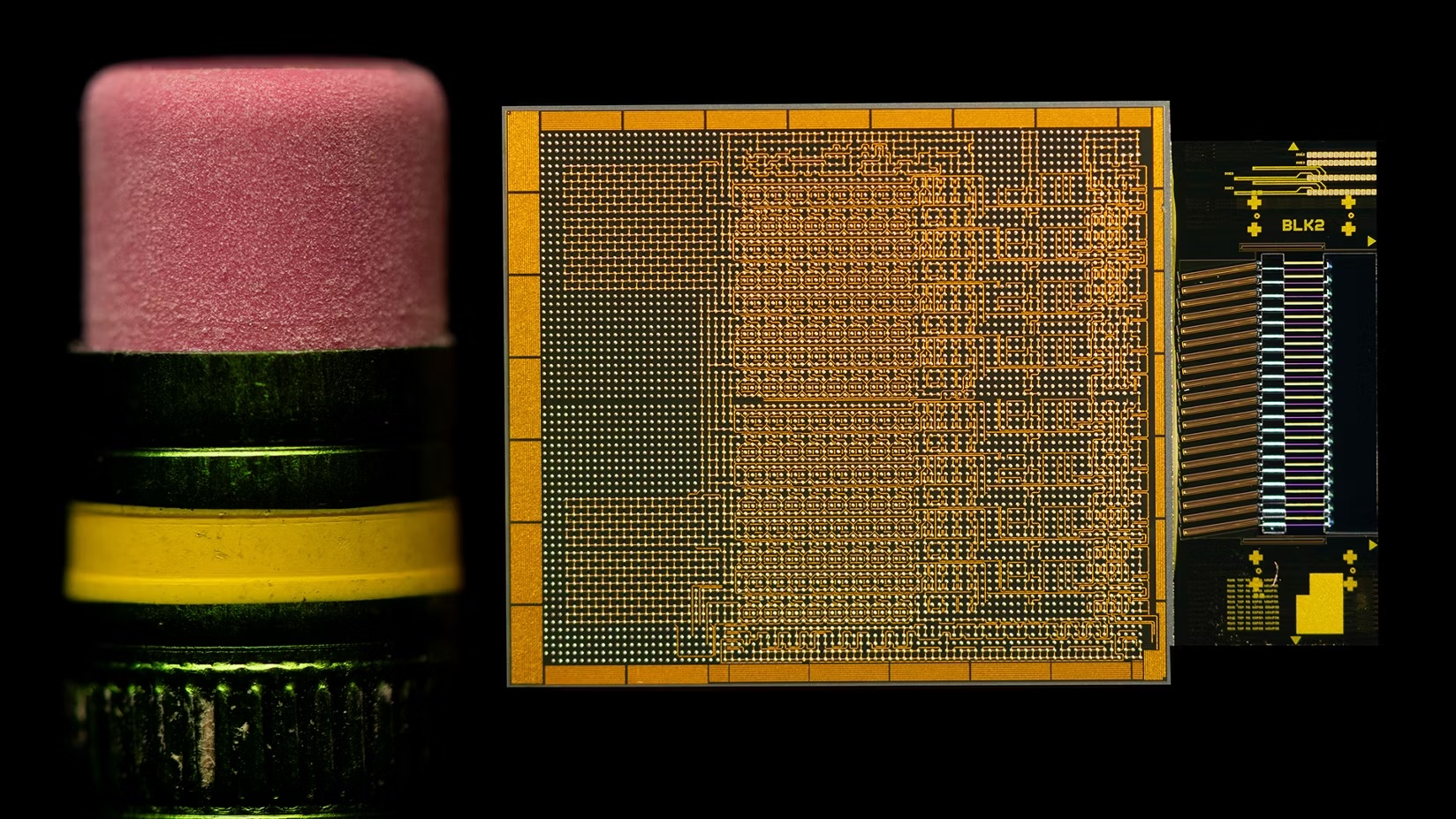

The prototype is the industry's first fully integrated bidirectional optical I/O chiplet. The optical compute interconnect (OCI) chiplet supports 64 channels of 32 gigabits per second (Gbps) data transmission in each direction over up to 100 meters of optical fiber. It is expected to address the growing demand for higher bandwidth in AI infrastructure while offering lower power consumption and longer reach.
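As a rough sanity check on the headline bandwidth, the channel figures can simply be multiplied out. This minimal Python sketch assumes each of the 64 channels carries 32 gigabits per second in each direction and ignores encoding and protocol overhead; the function name is purely illustrative.

```python
def aggregate_bandwidth_tbps(channels: int, gbps_per_channel: float, directions: int = 2) -> float:
    """Raw aggregate bandwidth in terabits per second (no overhead subtracted)."""
    total_gbps = channels * gbps_per_channel * directions
    return total_gbps / 1000  # 1 Tbps = 1,000 Gbps


per_direction = aggregate_bandwidth_tbps(64, 32, directions=1)
bidirectional = aggregate_bandwidth_tbps(64, 32)

print(f"Per direction: {per_direction:.3f} Tbps")  # Per direction: 2.048 Tbps
print(f"Bidirectional: {bidirectional:.3f} Tbps")  # Bidirectional: 4.096 Tbps
```

The result, about 4.1 terabits per second of raw capacity across both directions, lines up with the roughly 4 Tbps bidirectional total Intel advertises.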

The chiplet also supports novel compute architectures such as coherent memory expansion, and it can be attached to Intel CPUs and GPUs for future scalability. It integrates a silicon photonics integrated circuit (PIC) with on-chip lasers and optical amplifiers, and it supports 4 terabits per second (Tbps) of bidirectional data transfer while remaining compatible with PCIe Gen5. It also consumes only 5 picojoules (pJ) per bit, about a third of the energy per bit of pluggable optical transceiver modules.
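Energy per bit turns into power once you fix a data rate: watts equal joules per bit multiplied by bits per second. The sketch below assumes the link runs flat out at about 4 terabits per second and uses 15 pJ/bit as the pluggable-transceiver baseline, a figure inferred from the "a third" comparison rather than quoted directly. Real links rarely run at full utilization, so treat the results as upper-bound estimates.

```python
PJ_TO_J = 1e-12  # 1 picojoule = 1e-12 joules


def link_power_watts(pj_per_bit: float, tbps: float) -> float:
    """Power (W) = energy per bit (J/bit) x data rate (bit/s)."""
    bits_per_second = tbps * 1e12
    return pj_per_bit * PJ_TO_J * bits_per_second


oci = link_power_watts(5, 4.0)         # OCI chiplet: 5 pJ/bit at ~4 Tbps
pluggable = link_power_watts(15, 4.0)  # assumed baseline: 3x the OCI energy per bit

print(f"OCI chiplet: {oci:.0f} W")
print(f"Pluggable (assumed 15 pJ/bit): {pluggable:.0f} W")
```

At the same throughput, cutting energy per bit to a third cuts interconnect power to a third, roughly 20 W versus 60 W in this example.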

Intel is already working with customers to co-package the OCI chiplet with systems-on-chips (SoCs) and systems-in-packages (SiPs).

The new fiber optic chiplet will change the AI industry

AI applications are being deployed with increasing frequency thanks to large language models (LLMs) like OpenAI's ChatGPT and image generators like Stable Diffusion. As demand for AI applications grows, so will the need for larger, more efficient machine learning models. That means the industry needs to scale its computing platforms to keep pace with the exponential growth of the AI market.

AI workloads demand more powerful and efficient chips. Intel is starting at the chiplet level with the OCI design, which is low-power, offers high transfer speeds, and works over long distances, making it well suited to server architectures and cloud-based AI systems.

The faster and more efficient the connections inside an AI system are, the lower the lag.

“The ever-increasing movement of data from server to server is straining the capabilities of today’s data center infrastructure, and current solutions are rapidly approaching the practical limits of electrical I/O performance,” said Thomas Liljeberg, senior director of Product Management and Strategy at Intel’s Integrated Photonics Solutions Group.

“However, Intel’s achievement empowers customers to seamlessly integrate co-packaged silicon photonics interconnect solutions into next-generation compute systems, and increases reach, enabling ML workload acceleration that promises to revolutionize high-performance AI infrastructure.”

A former lab gremlin for Tom's Guide, Laptop Mag, Tom's Hardware, and TechRadar, Madeline has escaped the labs to join Laptop Mag as a Staff Writer. With over a decade of experience writing about tech and gaming, she may actually know a thing or two. Sometimes. When she isn't writing about the latest laptops and AI software, Madeline likes to throw herself into the ocean as a PADI scuba diving instructor and underwater photography enthusiast.